Dearest Rachel –

No, this doesn’t have anything to do with the yard; I don’t even get out there anymore to mow, now that I’ve enlisted a service to take care of that for me (and I admit, I’m not entirely sure what you would think about that. Would you be as reluctant to do so as you were about having a person to clean the inside of the house?). No, I’m talking about getting into some technical details about the latest (for now; these things get more advanced by the month, these days) in AI-based artwork.

I can’t claim to be on the cutting edge of this technology at this point, honey, mostly because I don’t have as much that I need or want to do with it – I may create some T-shirt art in the future, but it’s nothing urgent, as far as I’m concerned. Right now, a lot of people are basically using it as an ultra-sophisticated form of editorial cartoon, especially during the current election season. In fact, it’s gotten so prevalent (and apparently, so sophisticated) that a certain governor tried to outlaw the creation of such images, and the posting of them on social media, as ‘misinformation.’ This has precipitated lawsuits and other such action, and I think the governor has backed down, but you can never be sure.

Meanwhile, all I’ve been wanting to do with it is to create artwork of you: the sort of portraits that rich families living in the stately homes of medieval Europe would have had painted of their own ancestors and loved ones. Granted, I haven’t managed to create much that I’d consider worthy of putting on a canvas and hanging in the house, but the sheer volume of it all makes it hard to choose just one picture for that honor – and it’ll get that much more difficult as I navigate through this new system.

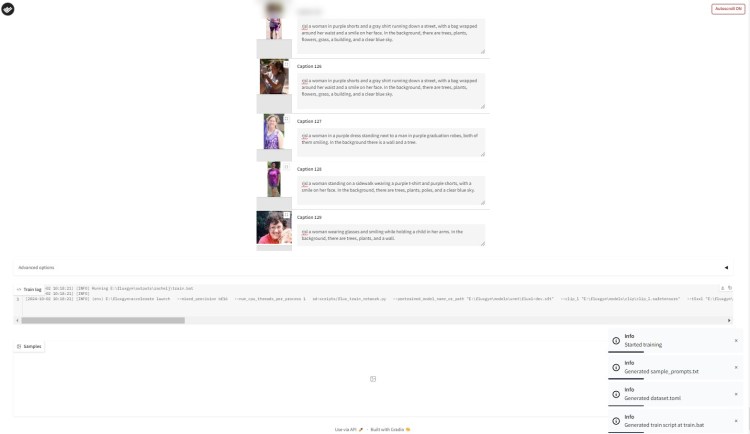

Ah, yes; this new system. You’ve heard me talk about this several times in the last few weeks, as I haven’t been able – either due to external responsibilities, or just the straightforward difficulties in getting it set up in the first place – to get it working satisfactorily. I didn’t want to walk you through it until I knew it would actually work; there’s no point explaining the inner workings of a program that doesn’t accomplish what you want it to – especially when you want to include examples of what it can produce.

Anyway, a quick explanation of the system itself, before going on to what I had to do to get here. The Flux checkpoint was developed by a group of folks who had either left Stability.ai or been let go by it. Stability is the company that developed the original Stable Diffusion models, of which version 1.5 is the one I’ve been using for the last year and a half. They’d developed further models, such as SDXL (which works at 1024×1024 and so handles more detail than the 512×512 models), but something happened with their version 3.0. I didn’t follow it closely, as I couldn’t figure out how to build a custom dataset in these newer models, but they may have had to incorporate a certain amount of, let’s say, political correctness in the new models, which sat well with neither the programmers nor their users. As a result, it fell flat, and a group of about fifteen of them left to form their own company and produce this new base model.

Along with the new model have come various programs that allow one to create one’s own LoRAs and the like. So naturally, I was interested; if nothing else, I’ve gathered up a lot more photographs of you since creating the first one in SD1.5.

But all those were samples I was looking at after the fact; the real test would be to create something in the ComfyUI setup. I decided to ask it something basic, so I merely prompted it for “rjsl (your designation) a woman” and let it work out any details it might want to. This proved to be a little too much to ask of it:

However, upon looking for suggestions for prompts on a site called PromptHero, I found a fairly simple one that I copied into the Comfy system, asking it to depict you as “a personification of summertime,” and I got some results that I think would have impressed you:

To be sure, some of the illustrations might stretch the limits of your appearance – and you were never one to wear sunglasses (although I can’t recall what you thought of my lenses that change shade in the sunlight, or if they ever interested you) – but I’d say you’re recognizable in most of these. One picture even has you carrying (if not using) nose plugs, which you did use, but they were never a part of the picture set I gave the computer to work with, so that’s an impressive deduction on its part.

Now, meanwhile, the all-in-one system has a few bells and whistles I’ve never used before, such as image-to-image. I made a couple of attempts at converting a picture or two into anime style, and…

Obviously, I have a lot of playing around in this system to do before I get the hang of it. But with that having been said, you might understandably ask me why I’m bothering to work with this new system. Wasn’t I able to produce perfectly good pictures before? Well, yes, but with each new model that comes out, people stop making add-on models for the old ones, and the existing add-ons disappear, along with any record of what their trigger words are. Without that kind of support, the old models get harder and harder to use properly. I mentioned that I also wanted to create a LoRA using more of your pictures, to get a better all-around image of you. And on the subject of better all-around images, Flux is supposedly the best at handling the anatomical problems previous models have had, particularly with hands and limbs.

And then there’s this…

And with that being said, you’ll have to wait a little longer while I see what else I can do. Until then, though, keep an eye on me, honey, and wish me luck. I’m going to need it.
